Measuring Code Improvements in ASP.Net

on

I wrote a blog post titled The Four Pillars of a Good Software Developer that covered some of the topics that we, as developers, need to be mindful of when writing or reviewing code. Even if your team does code reviews, it can still be a challenge for us mere human beings to retain all the useful information in books like the three previously mentioned whilst following the context of the application being reviewed, looking out for code smells, potential performance issues, memory management etc. So yeah, it’s pretty hard.

Reality suggests that most code bases in production will have some technical debt which is why I’m a huge fan of incremental development metrics. Using metrics it’s possible to get some kind of visibility of a project improving with each release.

What tools can be used in a release/deployment cycle that will automate some of the heavy lifting and provide a “metric” that all stakeholders can see with each release as an indicator that the code base is getting “better”?

An Example of “Bad” Code

First, let's consider some less than ideal code. If you’ve been working with ASP.Net for long enough, you’ve undoubtedly seen/written code like this…yes, it’s the canonical GridView (DataGrid if you’re really old school) mess*:

The .ASPX

- <%@PageLanguage="C#"AutoEventWireup="true"CodeBehind="Default.aspx.cs"Inherits="WhatMakesGoodCode.Default"%>

- <!DOCTYPEhtml>

- <htmlxmlns="http://www.w3.org/1999/xhtml">

- <headrunat="server">

- <title>What Makes Good Code Good?</title>

- </head>

- <body>

- <formid="form1"runat="server">

- <div>

- <asp:GridViewID="GridView1"runat="server"AutoGenerateColumns="False"OnRowDataBound="GridView1_OnRowDataBound"CellPadding="8">

- <Columns>

- <asp:BoundFieldDataField="ProductTitle"HeaderText="Product"/>

- <asp:BoundFieldDataField="Description"HeaderText="Desc."/>

- <asp:BoundFieldDataField="Price"HeaderText="Wholesale Price"/>

- <asp:BoundFieldDataField="Stock"HeaderText="No. in Stock"/>

- <asp:BoundFieldDataField="ReleaseDate"HeaderText="Release Date"/>

- <asp:TemplateFieldHeaderText="RRP">

- <ItemTemplate>

- <asp:Labelrunat="server"ID="RRP"></asp:Label>

- </ItemTemplate>

- </asp:TemplateField>

- </Columns>

- </asp:GridView>

- </div>

- </form>

- </body>

- </html>

The Code Behind:

- using System;

- using System.Collections.Generic;

- using System.Data;

- using System.Data.SqlClient;

- using System.Linq;

- using System.Web;

- using System.Web.UI;

- using System.Web.UI.WebControls;

- namespace WhatMakesGoodCode

- {

- public partial class Default : System.Web.UI.Page

- {

- protected void Page_Load(object sender, EventArgs e)

- {

- SqlConnection sqlConnection1 = newSqlConnection(@"Data Source=(localdb)\v11.0;Initial Catalog=GoodCodeStore;Integrated Security=True;MultipleActiveResultSets=True");

- SqlCommand cmd = newSqlCommand("SELECT * FROM PRODUCTS", sqlConnection1);

- sqlConnection1.Open();

- this.GridView1.DataSource = cmd.ExecuteReader(CommandBehavior.CloseConnection);

- this.GridView1.DataBind();

- sqlConnection1.Close();

- }

- protected virtual void GridView1_OnRowDataBound(object sender, GridViewRowEventArgs e)

- {

- if (e.Row.RowType == DataControlRowType.DataRow)

- {

- Label Label1 = (Label) e.Row.FindControl("RRP");

- /*

- * Some basic (silly?) logic for the purposes of an example.

- * Logic will markup the RRP by 35% if there are less than 25 copies in stock and the release date is this year.

- * Else, it will add 25% to the

- */

- if (int.Parse(e.Row.Cells[3].Text) < 25 && DateTime.Parse(e.Row.Cells[4].Text).Year == DateTime.Now.Year)

- {

- Label1.Text = (decimal.Parse(e.Row.Cells[2].Text) + (decimal.Parse(e.Row.Cells[2].Text) * (decimal)0.35)).ToString("N2");

- }

- else

- {

- Label1.Text = (decimal.Parse(e.Row.Cells[2].Text) + (decimal.Parse(e.Row.Cells[2].Text) * (decimal)0.25)).ToString("N2");

- }

- }

- }

- }

- }

* It’s not really THAT bad. It’s not ideal for larger applications with a lifespan longer than 12 months.

If we apply automated tools to this application, they would likely blow up with errors, right? Let’s see…

My vanilla setup covers (in order of priority):

- Test Coverage

- Measuring Complexity

- Basic Correctness & Static Code Analysis

- Style Consistency

If there is a budget for 3rd party tools:

- Feature Rich Code Analysis (which could incorporate some or all of the previous points)

Test Coverage

There are no unit tests for this project. So code coverage is not applicable.

This is a simple metric to measure. The aim is to increase test coverage percentage of the code base with each release. 100% coverage is probably not realistic or pragmatic. Anything above 60% is VERY good going. Although in some areas it would be more beneficial to have 100% coverage of a more complex part of an application whilst the wider coverage may be less than 50%.

There are various options for measuring code coverage that can be integrated into build cycles:

Measuring Complexity: Visual Studio Code Metrics

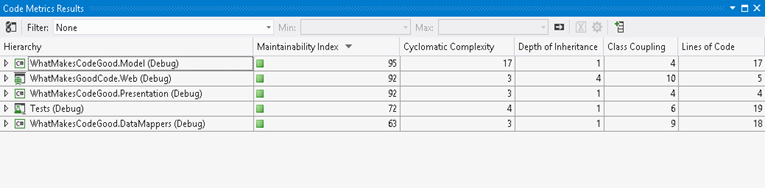

Visual Studio has built-in Code Metrics reporting which, when run on this application, indicate that there are no major problems:

Click Analyze menu, and then click Calculate Code Metrics for Solution.

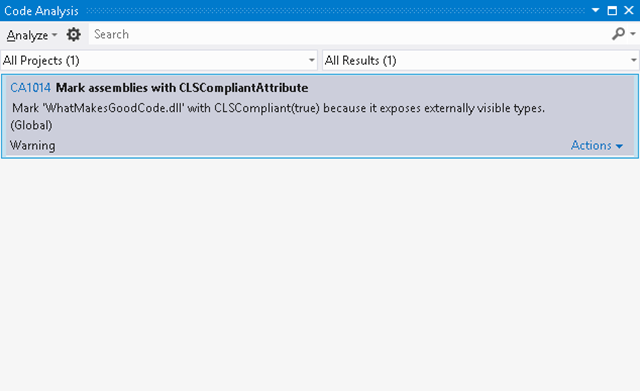

Basic Correctness & Static Code Analysis: Visual Studio Code Analysis

Visual Studio has integrated code analysis features (version dependent). The settings can be accessed via the project properties under “Code Analysis”. As a starting point I use the “Microsoft Basic Design Guideline Rules”:

The analysis doesn’t yield much in this example, since there isn't a lot of managed code:

Style Consistency: StyleCop

Running StyleCop on this project returns some minor complains regarding layout and the ordering of namespace imports:

I add some simple comments to methods and a document header to the code behind and the violation warnings clear.

Note: changes need to be made to the project file to run StyleCop as part of a build. The following post has details of how-to: HowTo: apply StyleCop Settings on several projects.

Feature Rich Code Analysis: NDepend

NDepend is a paid 3rd party tool that analyses assemblies and provides code metrics that incorporates some of the points previously discussed. A diagram I like is Abstractness vs. Instability.

The Abstractness versus Instability Diagram helps to detect which assemblies are potentially painful to maintain (i.e concrete and stable) and which assemblies are potentially useless (i.e abstract and instable).

- Abstractness: If an assembly contains many abstract types (i.e interfaces and abstract classes) and few concrete types, it is considered as abstract.

- Stability: An assembly is considered stable if its types are used by a lot of types of tier assemblies. In these conditions stable means painful to modify.

This diagram indicates that the project is within the green zone, but scores highly on instability. Perhaps an indication that something isn’t ok? This metric suggests that the entire implementation is in one place – which is true!

Initial Conclusion

This example serves to demonstrate that there is a limit to what these automated tools can offer and that there really is no substitute for skilled developers.

Even with this simple example, this data can be used to measure incremental improvements as the code base evolves.

Refactoring to “Better” Code

Having refactored this example code to an MVP pattern approach we can review the same metrics and compare the results. There is no logic in the UI. No direct data access in the code-behind and the application pays better attention to separation of concerns.

I’ve written about the MVP pattern before and rather than repeating all of the code we will just review how the code-behind of the .ASPX now looks with the dependencies abstracted:

- using WhatMakesCodeGood.Model;

- using WhatMakesCodeGood.Presentation;

- using WhatMakesCodeGood.Views;

- namespace WhatMakesGoodCode.Web

- {

- using System;

- using System.Collections.Generic;

- ///<summary>

- /// The products page.

- ///</summary>

- public partial class Default : System.Web.UI.Page, IProductView

- {

- ///<summary>

- /// The _product presenter.

- ///</summary>

- private readonly ProductPresenter _productPresenter;

- ///<summary>

- /// Initializes a new instance of the <see cref="Default"/> class.

- ///</summary>

- public Default(ProductPresenter productPresenter)

- {

- _productPresenter = productPresenter;

- }

- ///<summary>

- /// The page_ load.

- ///</summary>

- ///<param name="sender">

- /// The sender.

- ///</param>

- ///<param name="e">

- /// The page event args.

- ///</param>

- protected void Page_Load(object sender, EventArgs e)

- {

- _productPresenter.Initialize();

- }

- ///<summary>

- /// Sets the products.

- ///</summary>

- public IEnumerable<Product> Products

- {

- set

- {

- this.uiProducts.DataSource = value;

- this.uiProducts.DataBind();

- }

- }

- }

- }

The project also contains the following layers:

- Web – the .ASPX pages and code-behind files

- Presentation – the presenters

- Model – the domain model

- DataMappers – data access code

The Abstractness vs. Instability diagram from NDepend now looks as follows:

This diagram is suggesting that the Web and DataMappers projects are not abstract. It also places the Presentation project closer to “Uselessness” because it contains little code – this will change over time. Generally speaking, the markers are moving towards the centre of the diagram which suggests a better balance of dependencies.

Comparing the Results

Comparing the initial Code Metrics with the refactored version of only the ASP.Net project/code-behind shows the following differences:

Metric | Last Release | Current Release | Description |

| Maintainability Index | 69 | 92 | Higher is better |

| Cyclomatic Complexity | 6 | 3 | Lower is better |

| Class Coupling | 16 | 10 | Lower is better |

| Lines of Code | 13 | 5 | Lower is better (sometimes) |

Overall there are more lines of code for the Presenter, Model and Data Mappers in the solution but with better layering and abstraction, which reminds us that just following numbers doesn’t indicate good design.

Creating a Baseline

The first two comparison were extreme opposites and unrealistic examples for contrast. Starting with the MVP implementation as a baseline we get the following metrics:

Note that this indicates the DataMappers project scores below 70 for the maintainability index. Which flags this as a candidate for refactoring.

For the next release, ideally there would be a limited decrease in the existing metrics and time permitting, an increase in the maintainability index of the under performers.

Refactoring Phase 2

The DataMappers assembly, which was the lowest scoring in terms of maintainability from the benchmark, contains only one class. Based on the hints provided in the metrics, what could be changed to make this class have a higher maintainability index? And does it even matter?

Here is the class in question:

- using System;

- using System.Collections.Generic;

- using System.Data;

- using System.Data.SqlClient;

- using System.Linq;

- using System.Text;

- using System.Threading.Tasks;

- using WhatMakesCodeGood.Model;

- namespace WhatMakesCodeGood.DataMappers

- {

- public class ProductDataMapper : IProductDataMapper

- {

- public IEnumerable<Product> Products

- {

- get

- {

- SqlConnection sqlConnection1 = new SqlConnection(@"Data Source=(localdb)\v11.0;Initial Catalog=GoodCodeStore;Integrated Security=True;MultipleActiveResultSets=True");

- SqlCommand cmd = new SqlCommand("SELECT Id, ProductTitle, Description, Price, Stock, ReleaseDate FROM PRODUCTS", sqlConnection1);

- sqlConnection1.Open();

- List<Product> products = new List<Product>();

- SqlDataReader reader = cmd.ExecuteReader(CommandBehavior.CloseConnection);

- while (reader.Read())

- {

- Product product = new Product();

- product.Id = (int)reader.GetValue(0);

- product.ProductTitle = (string)reader.GetValue(1);

- product.Description = (string)reader.GetValue(2);

- product.Price = (decimal)reader.GetValue(3);

- product.Stock = (short)reader.GetValue(4);

- product.ReleaseDate = (DateTime)reader.GetValue(5);

- products.Add(product);

- }

- sqlConnection1.Close();

- return products;

- }

- }

- }

- }

After refactoring the ProductDataMapper (code snippets to follow) the comparisons are:

Metric | Benchmark | Refactored | Description |

| Maintainability Index | 63 | 84 | Higher is better |

| Cyclomatic Complexity | 3 | 9 | Lower is better |

| Class Coupling | 9 | 15 | Lower is better |

| Lines of Code | 18 | 14 | Lower is better (sometimes) |

What’s changed? Let’s start with the ProductDataMapper class. The dependency with the SqlConnection, SqlCommand and SqlRepeater have been abstracted away:

- namespace WhatMakesCodeGood.DataMappers

- {

- public class ProductDataMapper : AbstractDataMapper<Product>, IProductDataMapper

- {

- protected override string FindAllSelect

- {

- get { return"SELECT Id, ProductTitle, Description, Price, Stock, ReleaseDate FROM PRODUCTS"; }

- }

- protected override Product Map(IDataRecord reader)

- {

- return new Product()

- {

- Id = (int)reader.GetValue(0),

- ProductTitle = (string)reader.GetValue(1),

- Description = (string)reader.GetValue(2),

- Price = (decimal)reader.GetValue(3),

- Stock = (short)reader.GetValue(4),

- ReleaseDate = (DateTime)reader.GetValue(5)

- };

- }

- }

- }

The code for the abstract class AbstractDataMapper:

- namespace WhatMakesCodeGood.DataMappers

- {

- public abstract class AbstractDataMapper<T>

- {

- protected abstract string FindAllSelect { get; }

- protected IDbConnection Connection

- {

- get

- {

- return new SqlConnection(ConfigurationManager.ConnectionStrings["WhatMakesCodeGood"].ConnectionString);

- }

- }

- public virtual IEnumerable<T> FindAll()

- {

- SqlCommand cmd = new SqlCommand(FindAllSelect, Connection as SqlConnection);

- List<T> items = new List<T>();

- SqlDataReader reader = cmd.ExecuteReader(CommandBehavior.CloseConnection);

- while (reader.Read())

- {

- items.Add(Map(reader));

- }

- return items;

- }

- protected abstract T Map(IDataRecord reader);

- }

- }

Since there was only once instance of the ProductDataMapper, this simple example doesn’t convey the full potential impact of this refactor

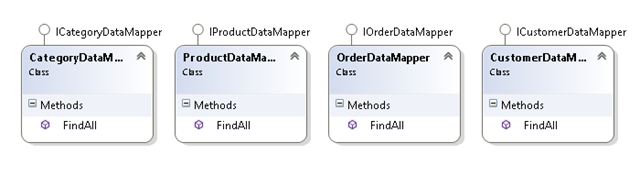

Consider if there were four different variants of the initial un-refactored ProductDataMapper class:

Refactoring these classes and abstracting away the dependencies should demonstrate a more profound impact on the metrics. The refactored class now look as per the class diagram:

Here is how the metrics compare:

Metric | Last Release | Current Release | Description |

| Maintainability Index | 68 | 88 | Higher is better. Indicates 20% improvement. |

| Cyclomatic Complexity | 12 | 18 | Lower is better. |

| Class Coupling | 15 | 20 | Lower is better. This increases slightly, since the abstract class is introduced. |

| Lines of Code | 48 | 26 | Lower is better (sometimes). Significant reduction, even for only 4 classes. |

The metric suggests a 20% improvement. However, in my opinion the improvement is 1000% which suggests there is still a requirement for developer skill.

Calculating Maintainability

The formula used by the Visual Studio Code Metrics is:

MAX(0,(171 - 5.2 * ln(Halstead Volume) - 0.23 * (Cyclomatic Complexity) - 16.2 * ln(Lines of Code))*100 / 171)

- Halstead: measurable properties of software, and the relations between them. Think dependencies!

- Cyclomatic Complexity: directly measures the number of linearly independent paths through a program's source code. Think of lots of nested IF statements!

- Lines of Code: the lines of code within a class/project/application.

You can read more about the Maintainability Index Range and Meaning.

Build Integration

There are many combinations of how these tools can be used i.e. Team City with ReSharper. TFS with StyleCop and Code Analysis reporting to name a few.

- Running Code Metrics as Part of a TFS 2010 Build – The Poor Man’s Way

- TeamCity

- NDepend can be integrated with a bunch of continuous integration tools like TFS, CruiseControl.net etc

Conclusion

These tools won't write great code for you and are little more than a productivity tool. When integrated as part of a release cycle these metrics offer a very useful "heads up display" of how a code base is evolving (degrading?). These tools can help you identify the problem area more efficiently, but they won't tell you how to resolve it.

Disclaimer: I was given a free NDepend license for developer evaluation. I’m not associated with NDepend. I just think it has some nice features that are useful.

![AbstractnessVSInstability[6] AbstractnessVSInstability[6]](http://lh5.ggpht.com/-STQ0ioduuzY/UhOJdavs0XI/AAAAAAAAA7E/WysyxZ-bWCM/AbstractnessVSInstability6_thumb3.png?imgmax=800)